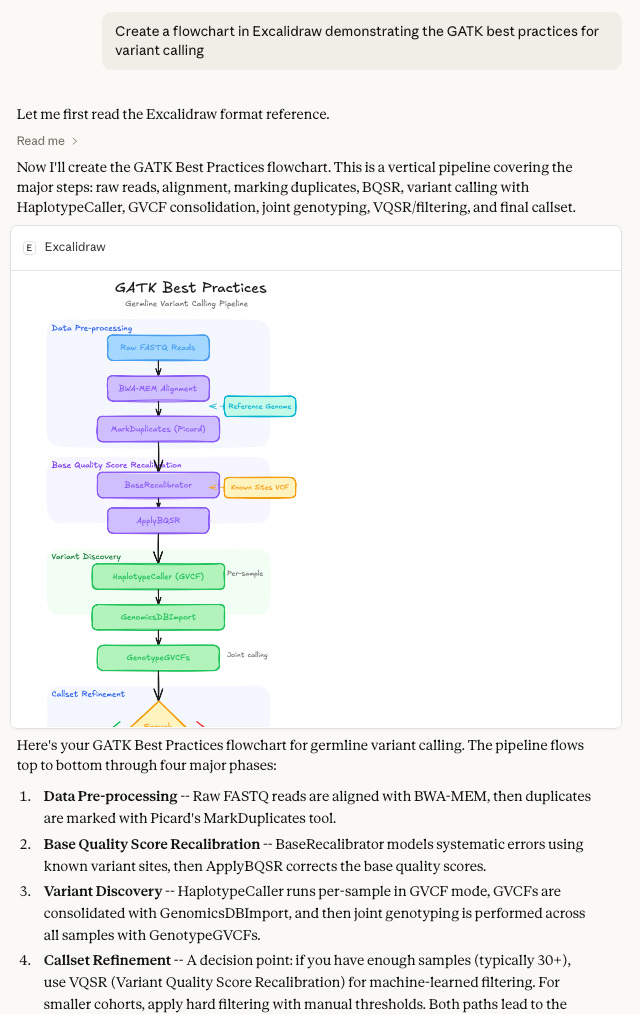

Make diagrams with draw.io using Claude project instructions or MCP, or with Excalidraw using the connector.

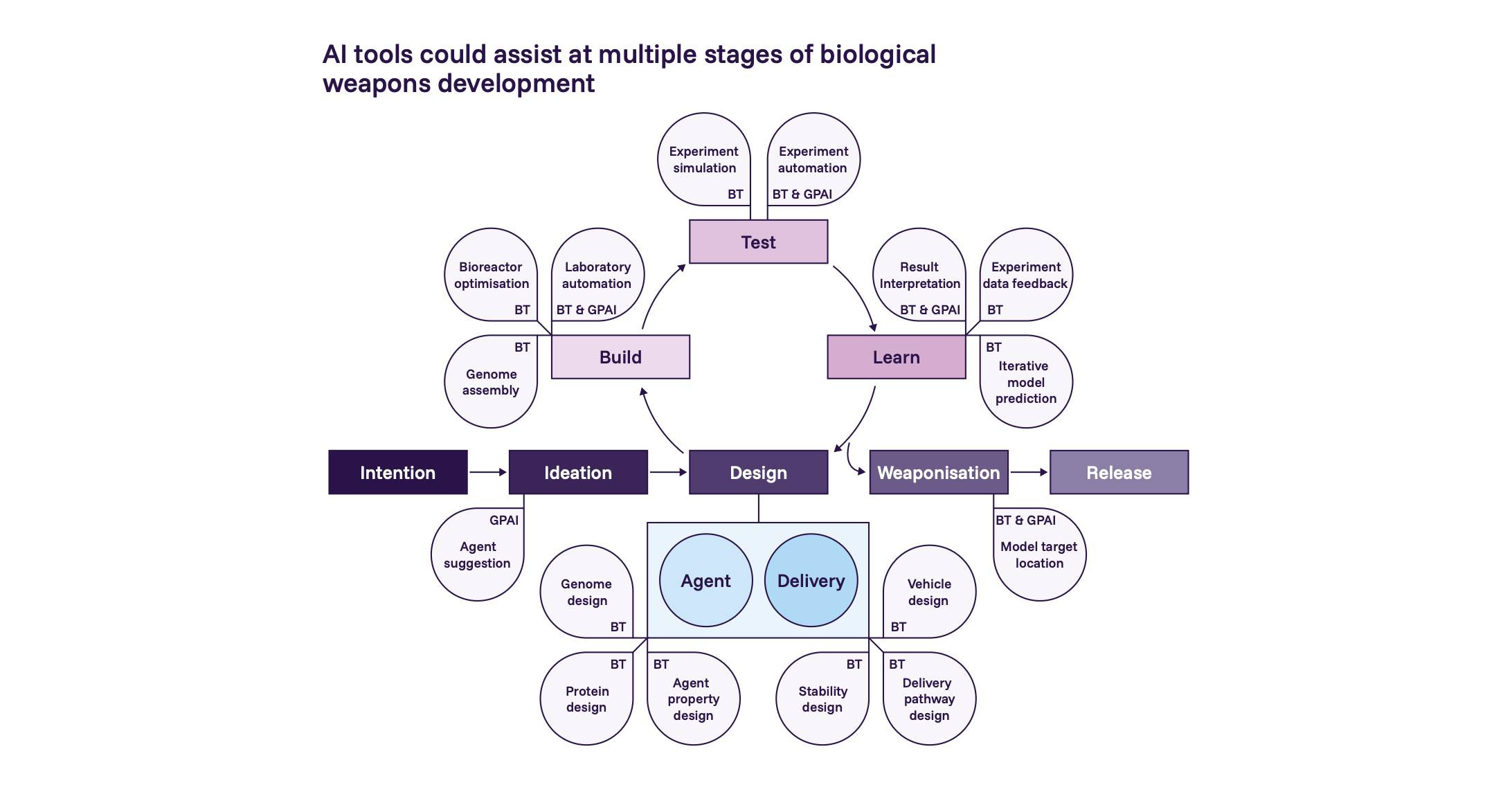

Parallel proposals target training data and model access to reduce biosecurity risk

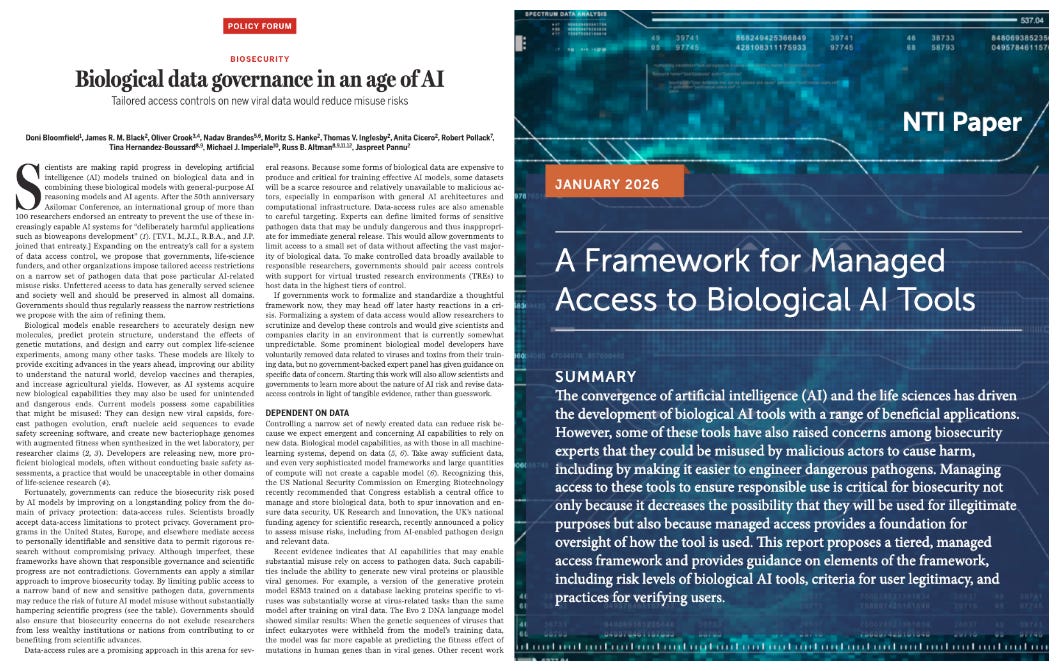

Chapter 3 of the National Academies report on AIxBio: Biosecurity implications for what AI can and can't do in biology today.

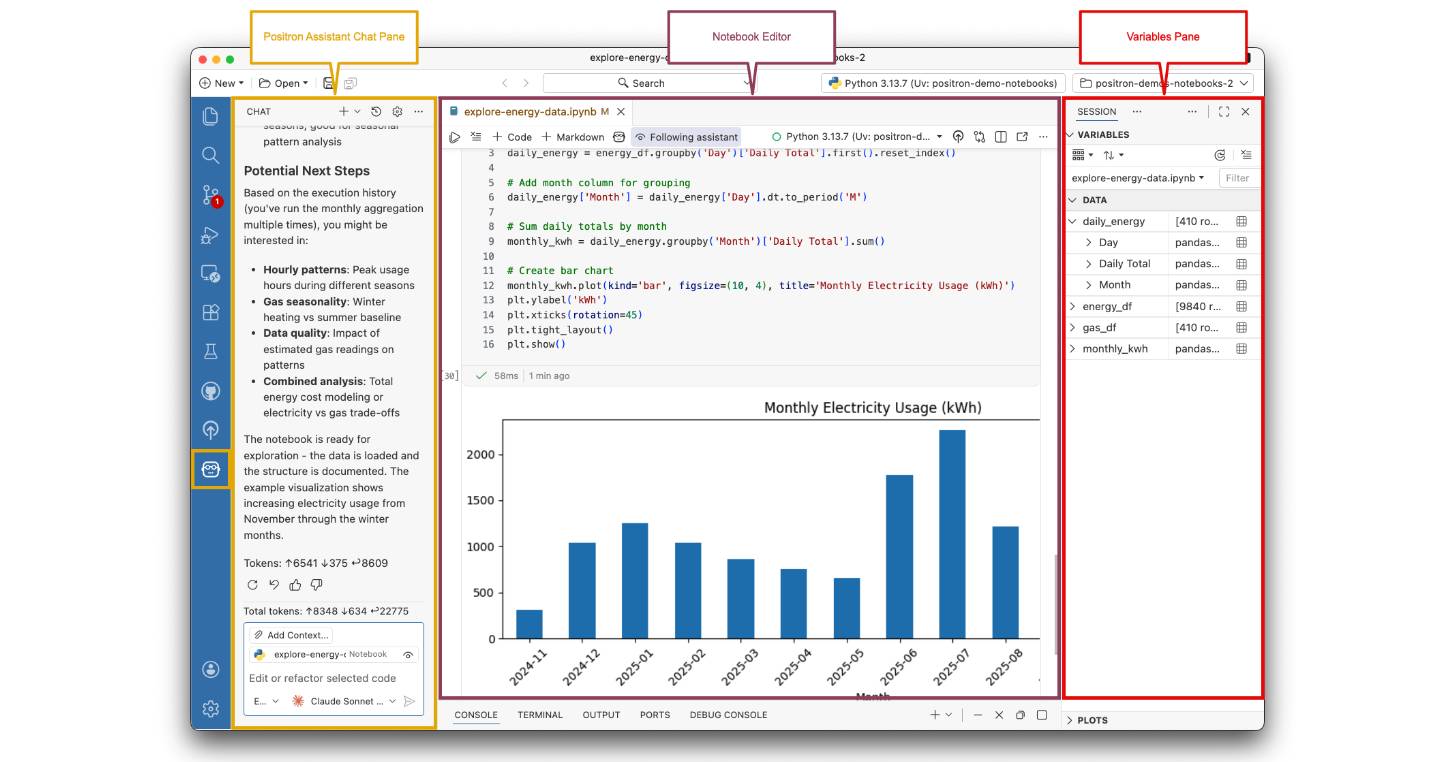

Positron + Jupyter, Claude Opus 4.6, Vicki Boykis at AMLC, Emily Riederer, Wes McKinney, Codex, CC for science, R updates (R Data Scientist, R Works, R Weekly, R-Ladies, rOpenSci), AlphaGenome, ...

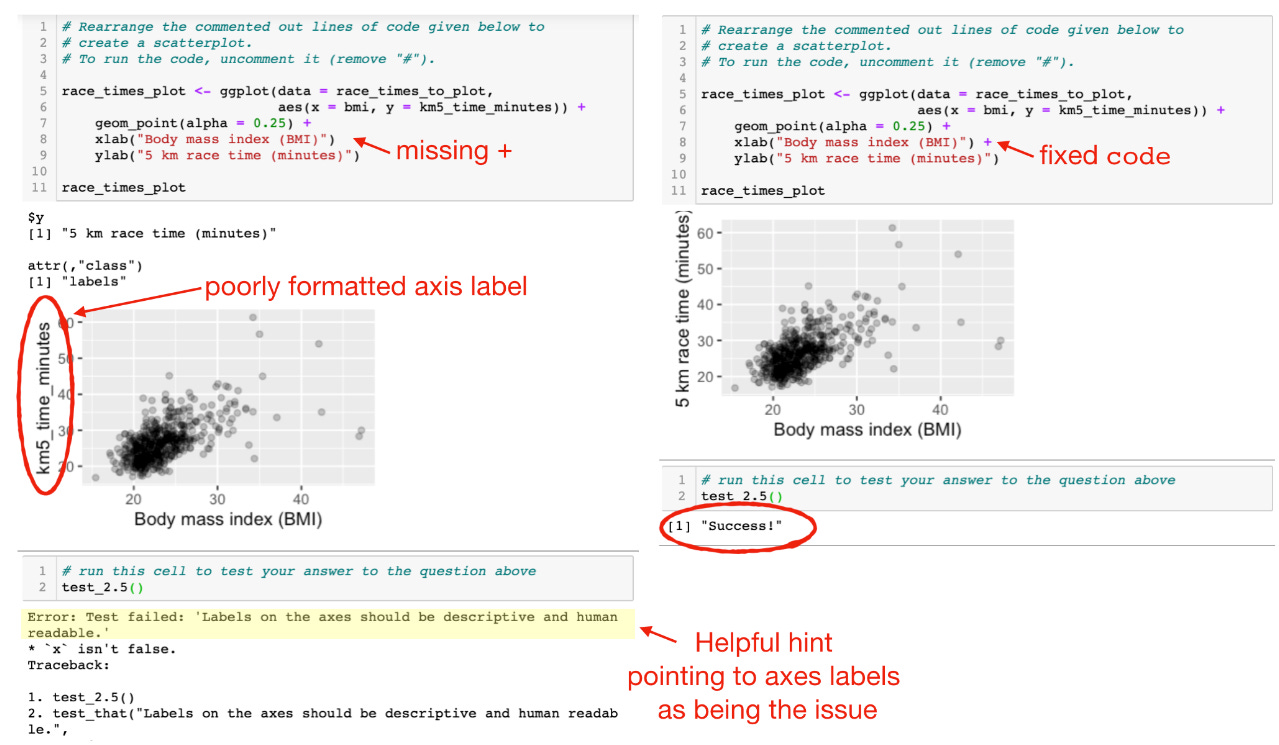

A new paper from Tiffany Timbers (UBC) and Mine Çetinkaya-Rundel (Duke), and lessons I learned from my time with Software Carpentry

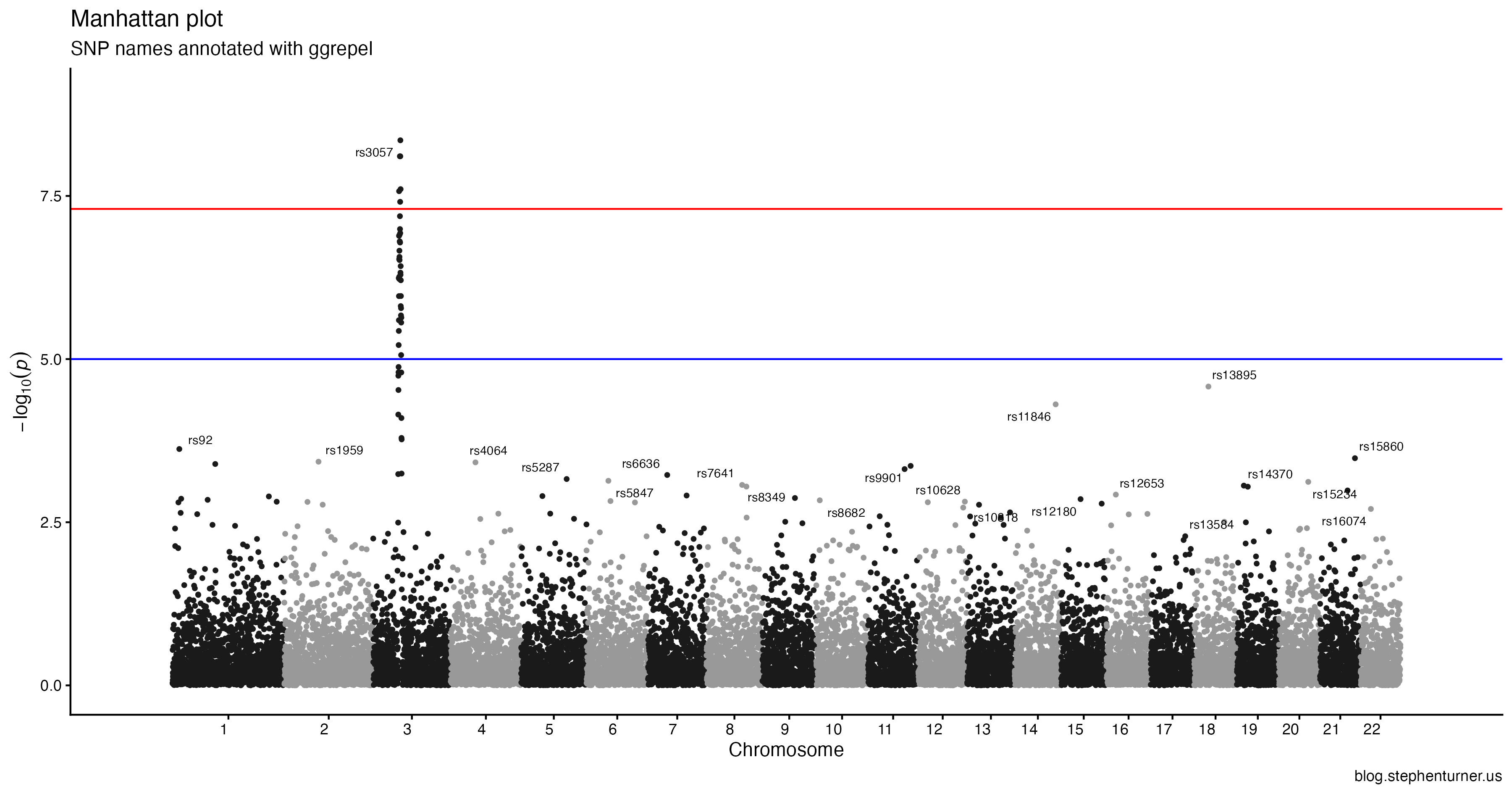

Vibe-updating my old qqman R package to ggplot2 with plan+execute.

Notes from the 2026 International AI Safety Report

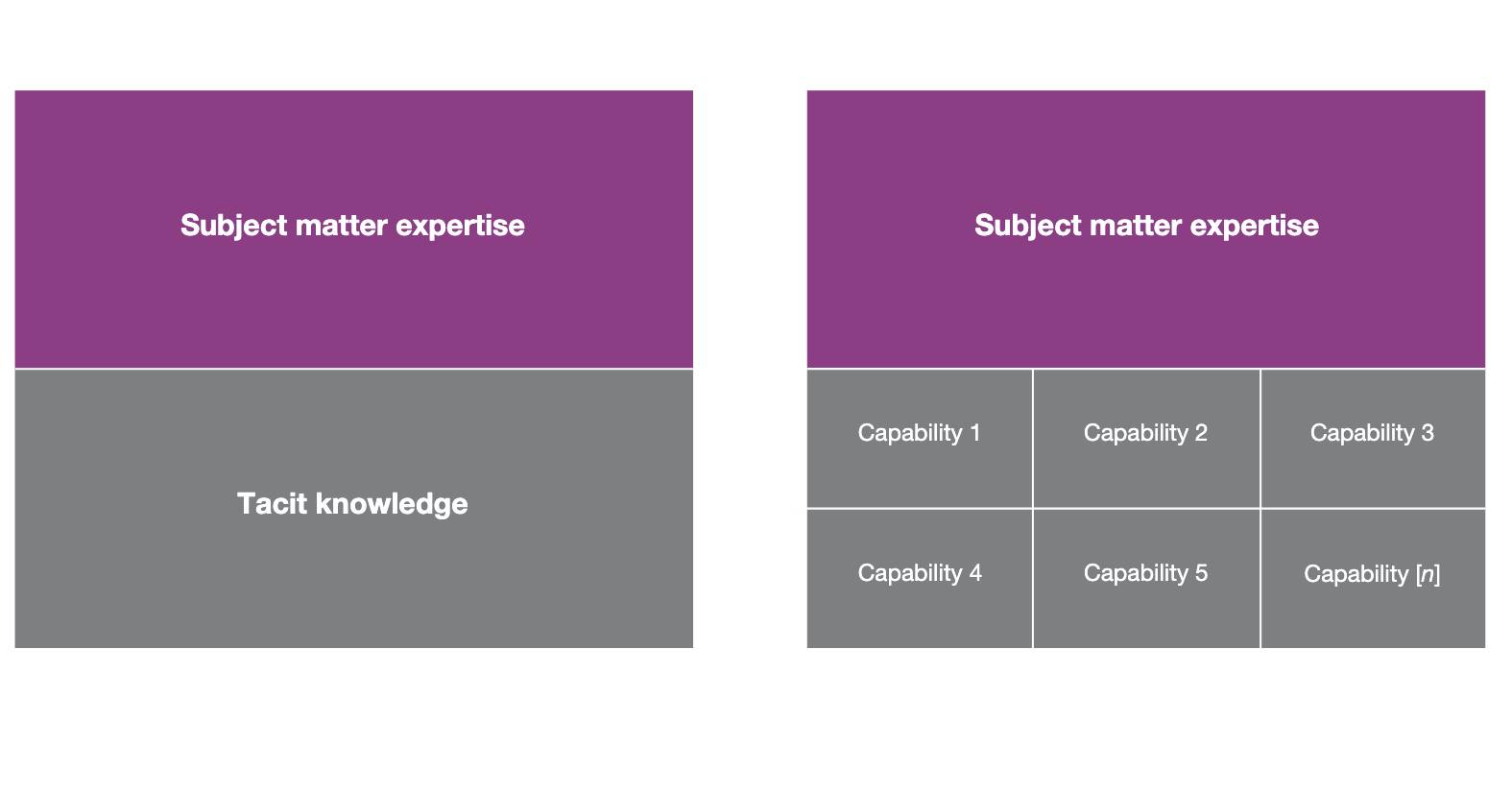

What's "tacit knowledge" in biosecurity, and is it really a barrier? A look at the 2025 RAND paper "Contemporary Foundation AI Models Increase Biological Weapons Risk." 1.7k words, 8 min reading time.

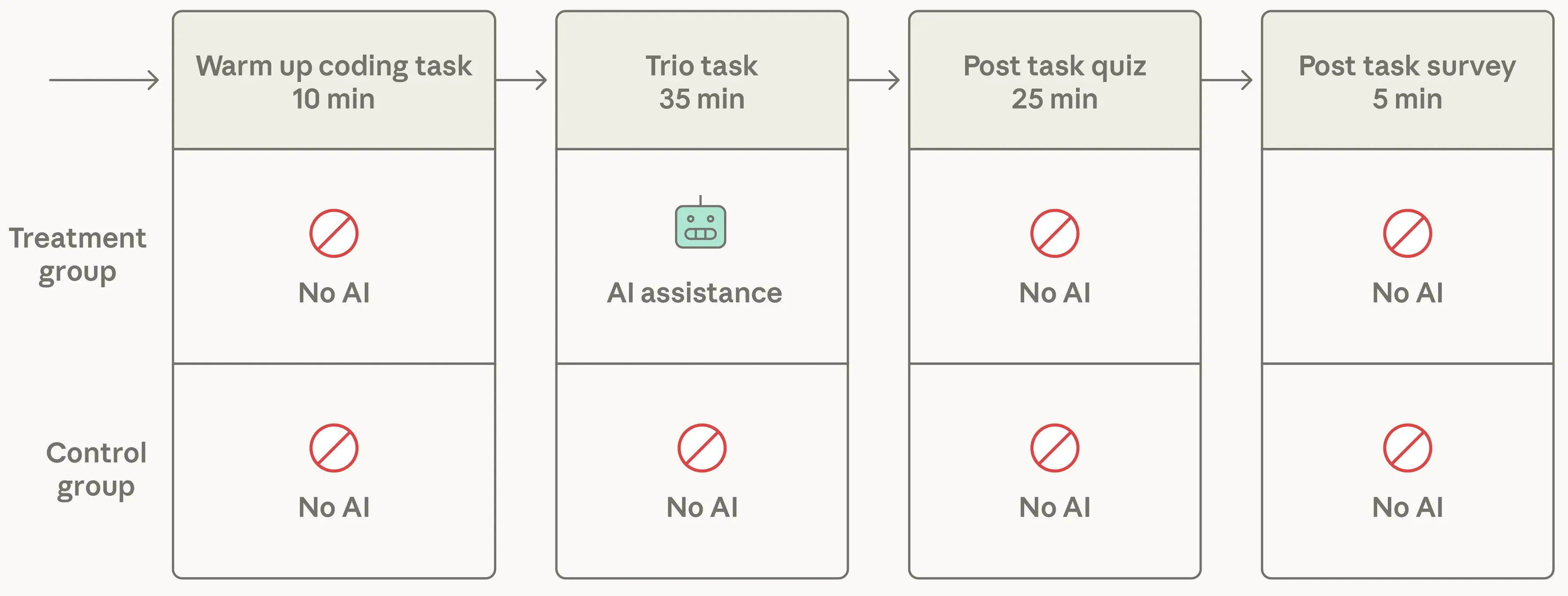

Research from scientists at Anthropic suggests productivity gains come at the cost of skill development.

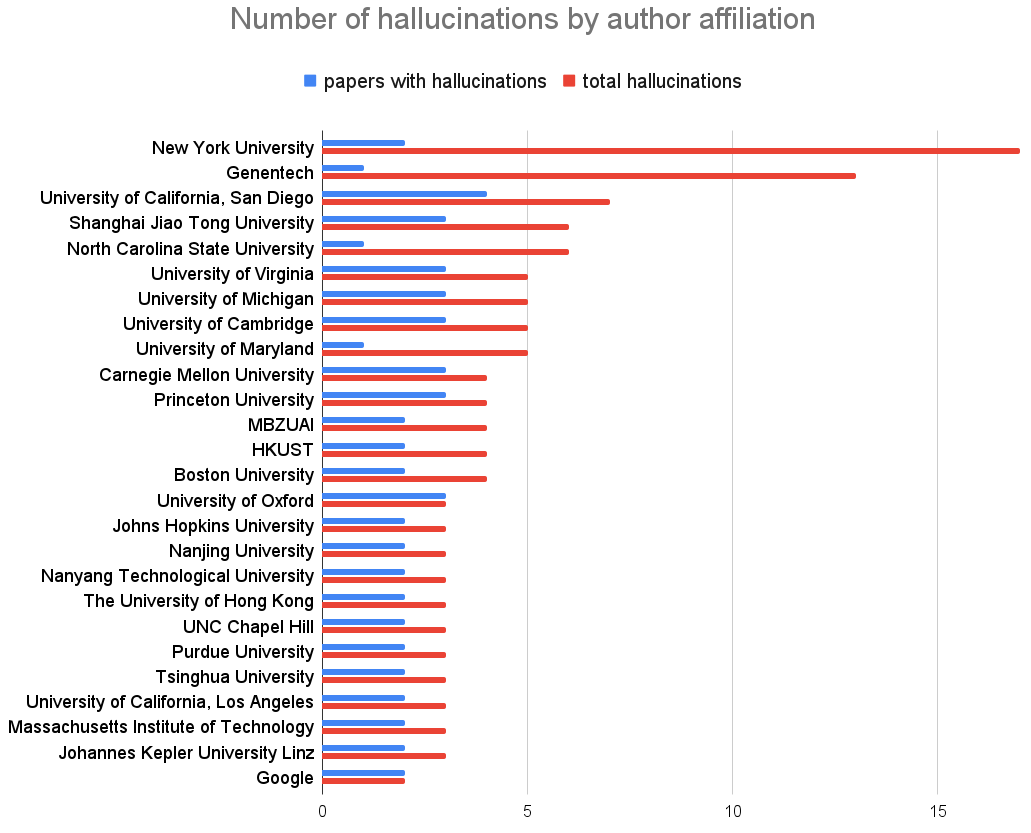

Global Biodiversity Framework, Colossal+Conservation, DARPA GUARDIAN, NTI on AIxBio, NeurIPS hallucitations, Claude in Excel, AI@UVA, Amodei essay, R updates (R Weekly, R Data Scientist), papers.

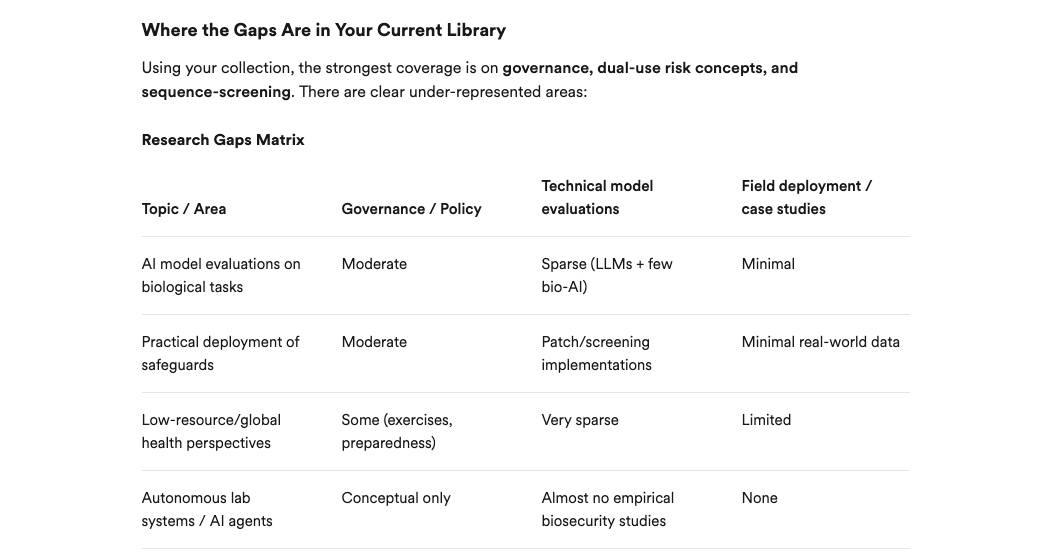

Sync your Zotero library to Consensus AI to ask questions about papers you've saved, and find and fill in gaps in your collections.