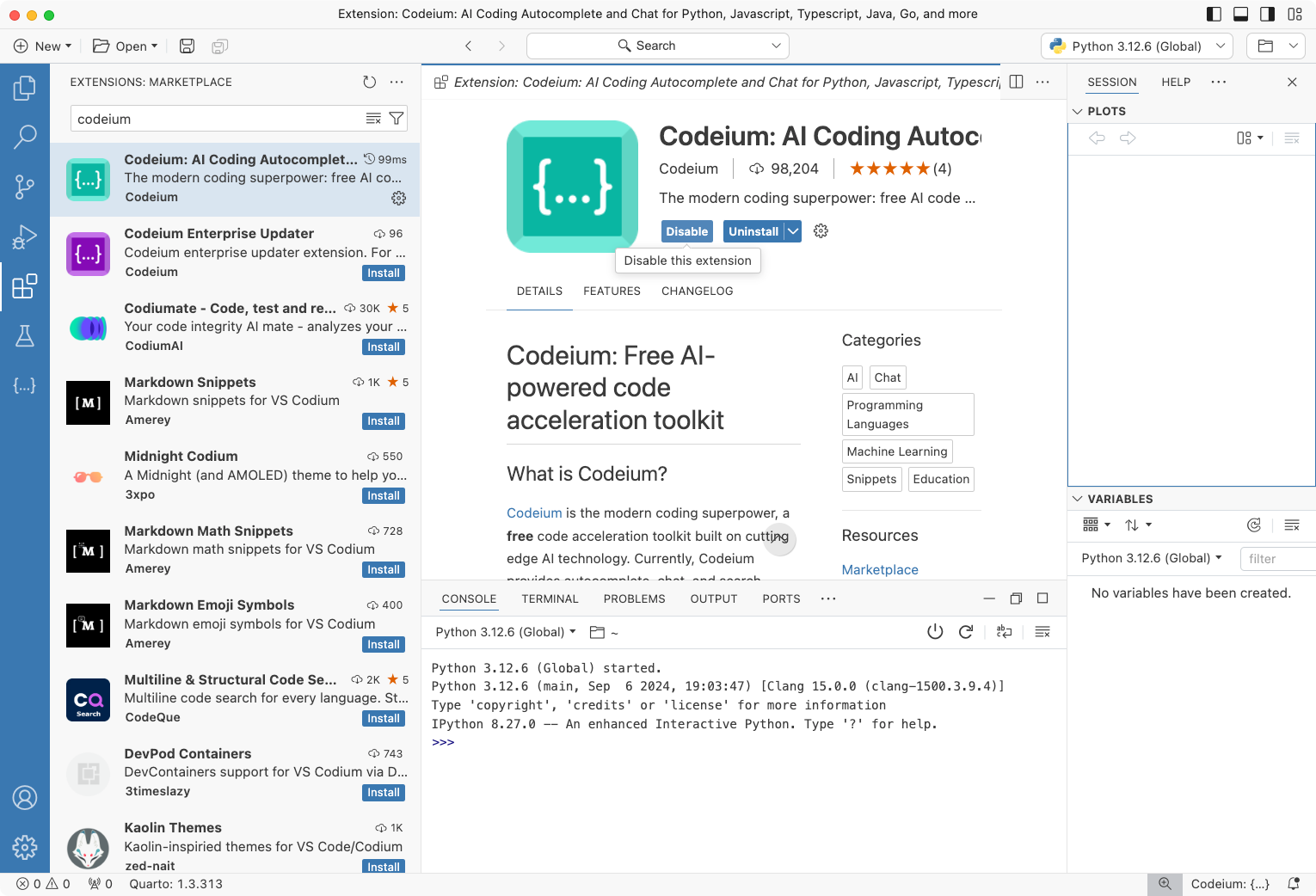

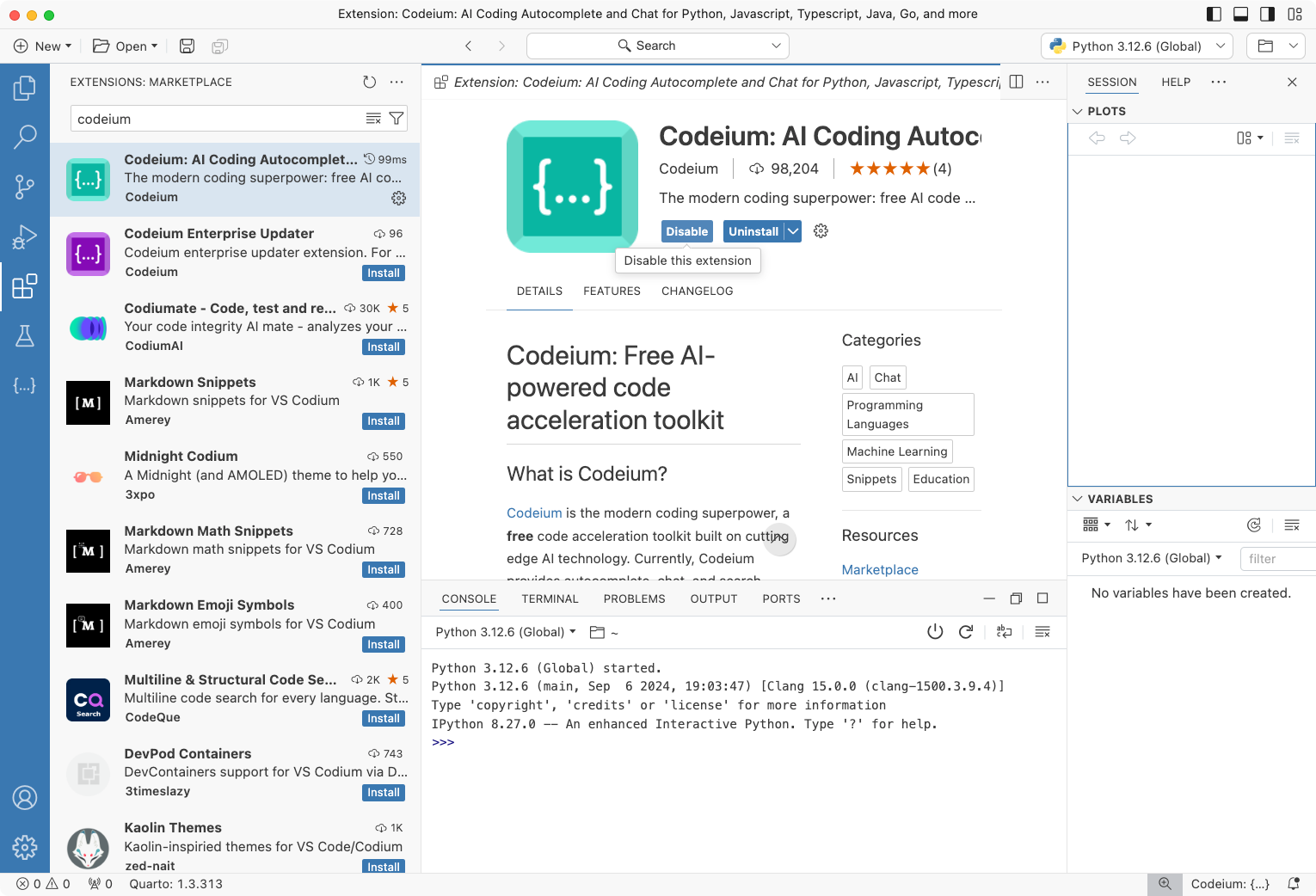

TL;DR: Codeium offers a free Copilot-like experience in Positron. You can install it from the Open VSX registry directly within the extensions pane in Positron.

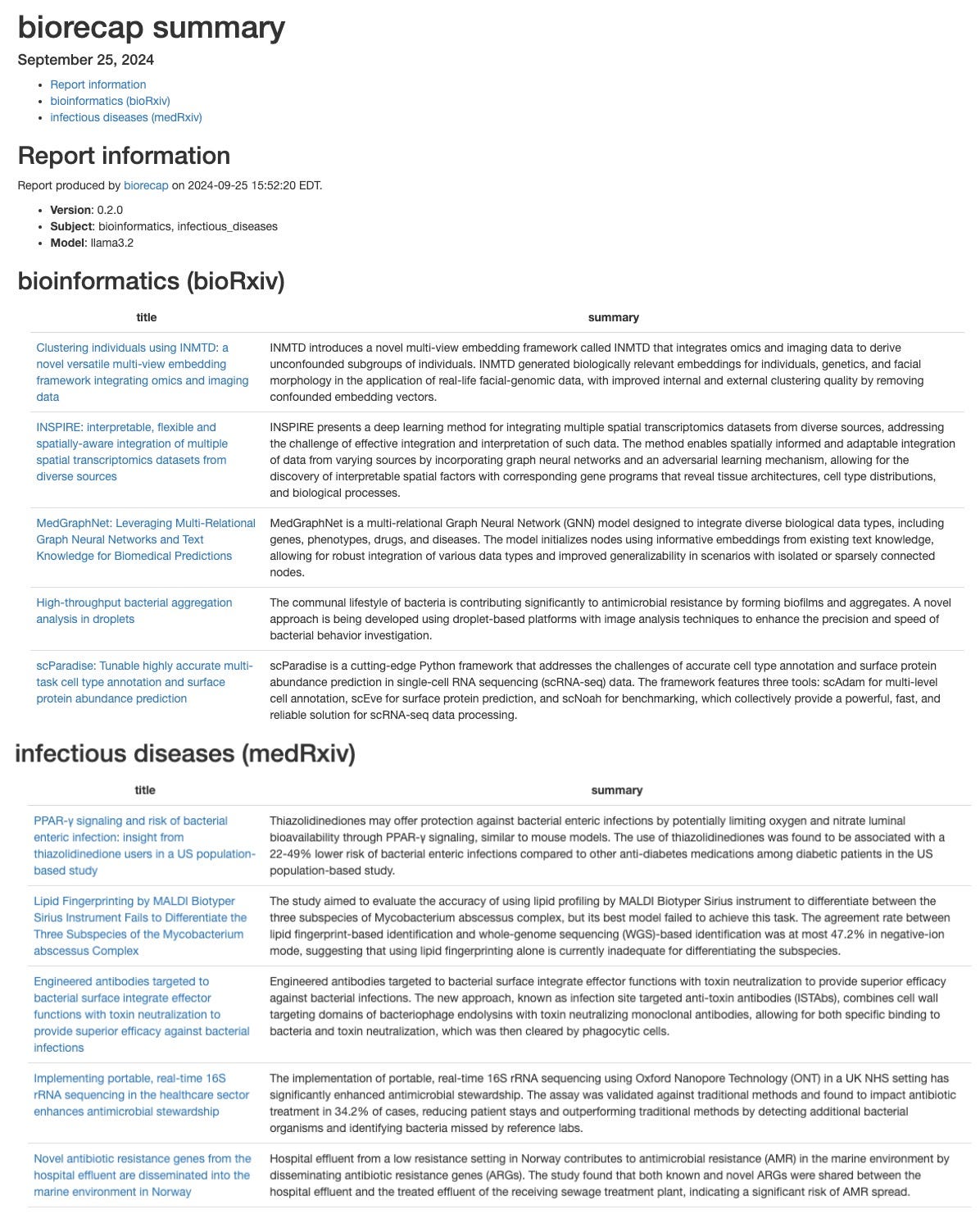

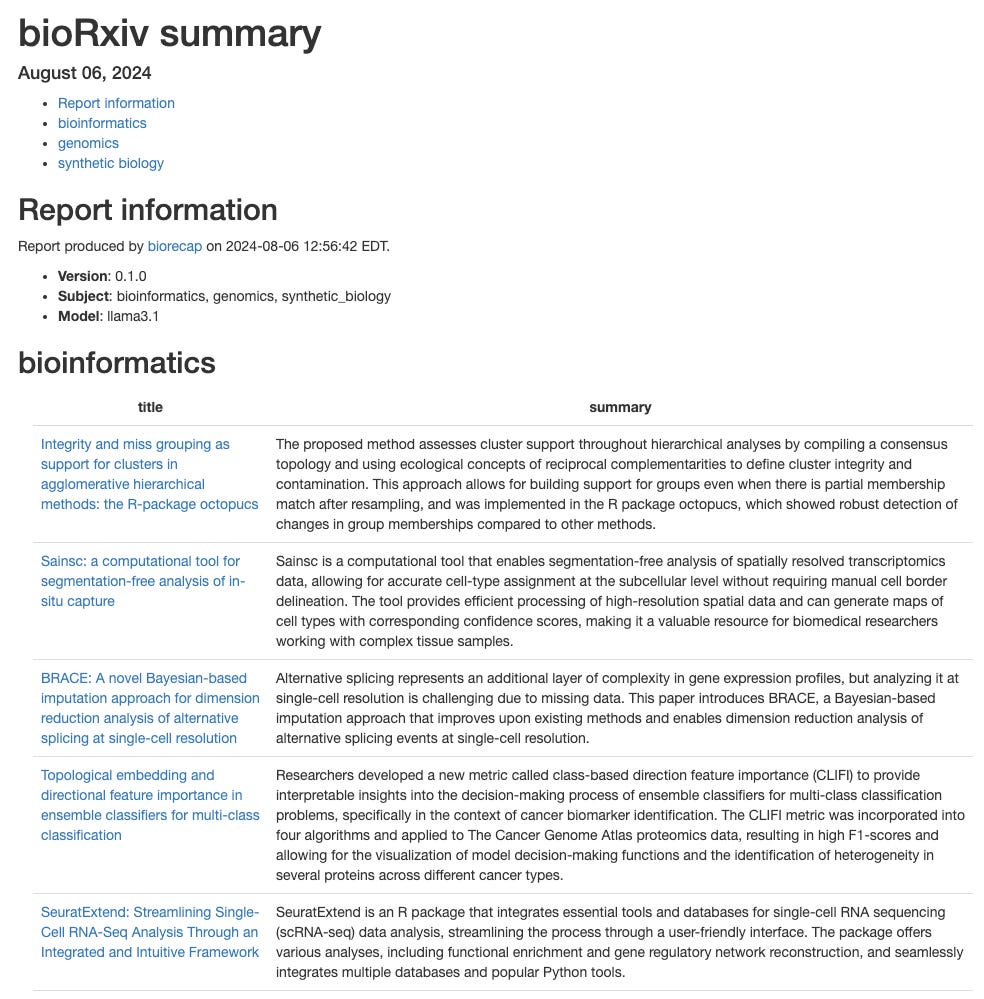

Last month I published a paper and an R package for summarizing preprints from bioRxiv using a local LLM. I wrote about it here. Llama 3.2 was just released today (announcement). The biggest news is the addition of a multimodal vision model, but I was intrigued by the reasonably good performance of the tiny 3B text model. I used this as an excuse to update the biorecap R package.

Google has a new experimental¹ tool called Illuminate (illuminate.google.com) that takes a link to a preprint² and creates a podcast discussing the paper. When I tested it with a few preprints, the podcasts it generated were about 6-8 minutes long, featuring a male and a female voice discussing the key points of the paper in a conversational style. There are some obvious shortcomings.

Llama 3.1 405B is the first open-source LLM on par with frontier models GPT-4o and Claude 3.5 Sonnet. I’ve been running the 70B model locally for a while now using Ollama + Open WebUI, but you’re not going to run the 405B model on your MacBook.

TL;DR: I wrote an R package that summarizes recent bioRxiv preprints using a locally running LLM via Ollama + ollamar, and produces a summary HTML report from a parameterized R Markdown template.

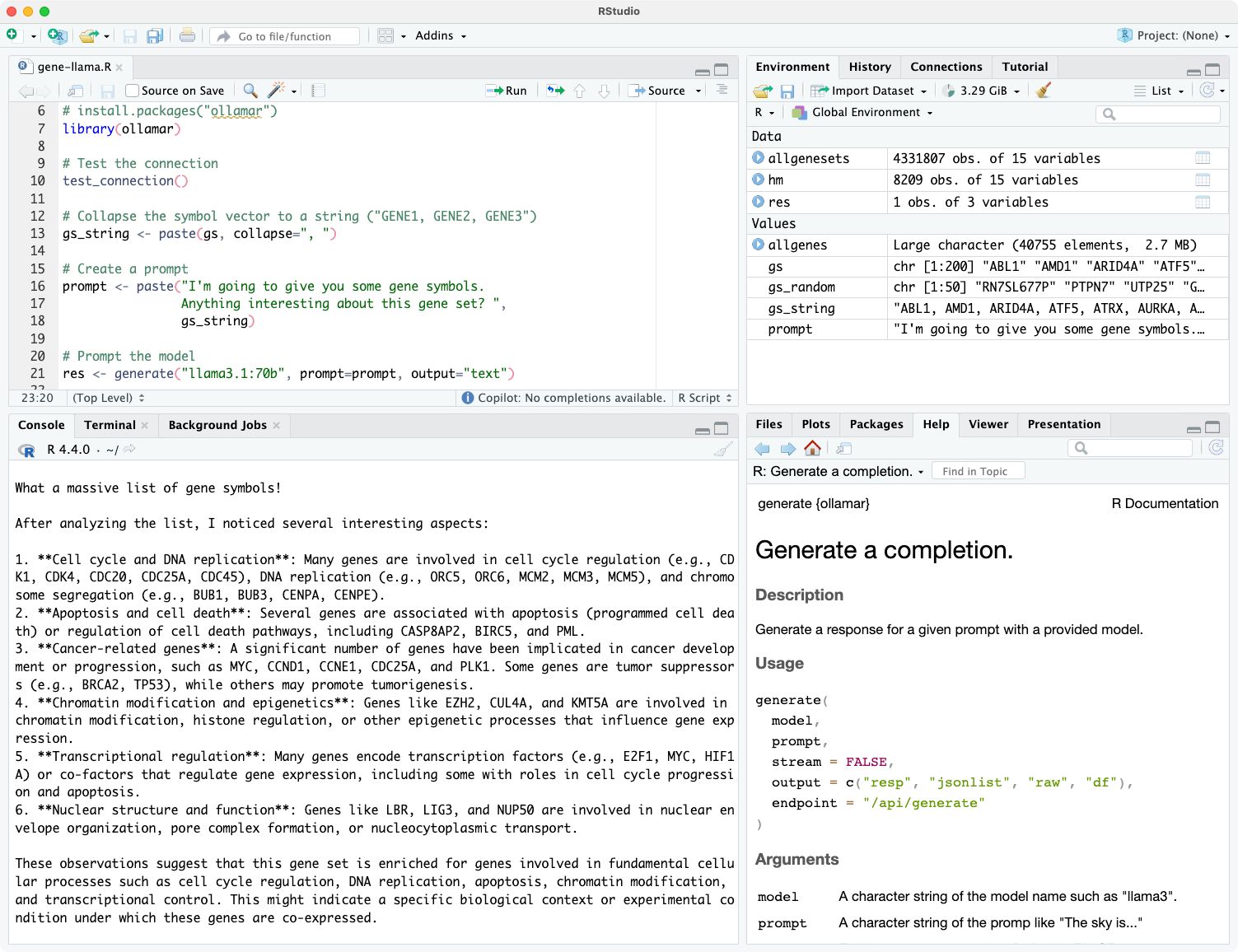

I’ve been running Meta's newly released llama3.1:70b model with Ollama on my MacBook Pro. Ollama makes it easy to talk to a locally running LLM in the terminal (ollama run llama3.1:70b) or through a familiar GUI with the open-webui Docker container. Here I’ll demonstrate using the ollamar package on CRAN to talk to an LLM running locally on my Mac.
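Under the hood, a client like ollamar talks to the Ollama server over its local HTTP API. As a rough, language-agnostic sketch (this is not the ollamar API and not code from the post), the request below posts a prompt directly to Ollama's /api/generate endpoint. It assumes the server is listening on the default port 11434, the model has already been pulled, and streaming is turned off so the whole answer comes back as one JSON object. The helper names build_request and generate are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint for single-prompt generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint (hypothetical helper)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send the prompt and return the generated text (requires a running Ollama server)."""
    with urllib.request.urlopen(build_request(model, prompt, url)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, the full completion is in the "response" field.
    return body["response"]
```

With a server running, generate("llama3.1:70b", "Summarize this abstract in two sentences: ...") returns the model's reply as a plain string. Keeping request construction separate from the network call makes it easy to inspect the payload without a live server.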