Expanded from a post on X, which I felt didn’t do a good job of expressing all of what I meant. The past few years of “AI for life science” have been all about the models: AlphaFold 3, neural-network potentials, protein language models, binder generation, docking, co-folding, ADME/tox prediction, and so on. But Chai-2 (and lots of related work) shows us that the vibes are shifting. Models themselves are becoming just a building block;

Corin Wagen

“You dropped a hundred and fifty grand on a f***** education you coulda' got for a dollar fifty in late charges at the public library.” — Good Will Hunting

I was a user of computational chemistry for years, but one with relatively little understanding of how things actually worked below the input-file level.

Recently, Kelsey Piper shared that o3 (at the time of writing, one of the latest reasoning models from OpenAI) could guess where outdoor images were taken with almost perfect accuracy. Scott Alexander and others have since verified this claim.

I did not enjoy John Mark Comer’s book The Ruthless Elimination of Hurry. Comer’s book is written for people trying to find meaning in a world that feels rushed, distracted, and isolated.

In scientific computation, where I principally reside these days, there’s a cultural and philosophical divide between physics-based and machine-learning-based approaches. Physics-based approaches to scientific problems typically start with defining a simplified model or theory for the system.

With apologies to Andrew Marvell. If you haven’t read “To His Coy Mistress”, I fear this won’t make much sense.

Had we but funding enough and time,
This coyness, founder, were no crime.
We would sit down, and think which way
To build, and pass our slow run-rate’s day.
Thou by the Potomac’s side
Shouldst SBIRs find; I by the tide
Of El Segundo would complain.

In 2007, John Van Drie wrote a perspective on what the next two decades of progress in computer-assisted drug design (CADD) might entail. Ash Jogalekar recently looked back at this list, and rated the progress towards each of Van Drie’s goals on a scale from one to ten.

This post is an attempt to capture some thoughts I have about ML models for predicting protein–ligand binding affinity, sequence- and structure-based approaches to protein modeling, and what the interplay between generative models and simulation may look like in the future. I have a lot of open questions about this space, and Abhishaike Mahajan’s recent Socratic dialogue on DNA foundation models made me curious to try the dialogue format here.

(This is a bit of a departure from my usual chemistry-focused writing.) Fasting is an important part of many religious traditions, but modern Protestant Christians don’t really have a unified stance on fasting (and have opposed systematic fasts for a while). That’s not to say that Protestants don’t fast, though: over just the past few years, I’ve met people doing water-only fasts, juice fasts, dinner-only fasts, “social media” fasts, and many

(Previously: 2022, 2023.)

#1. Baldassar Castiglione, The Book of the Courtier

This book gets cited from time to time as a sort of historical guide to "being cool," since the characters spend some time discussing the idea of sprezzatura, basically grace or effortlessness. More interesting to me were the differences between Renaissance conceptions of virtue, character, &

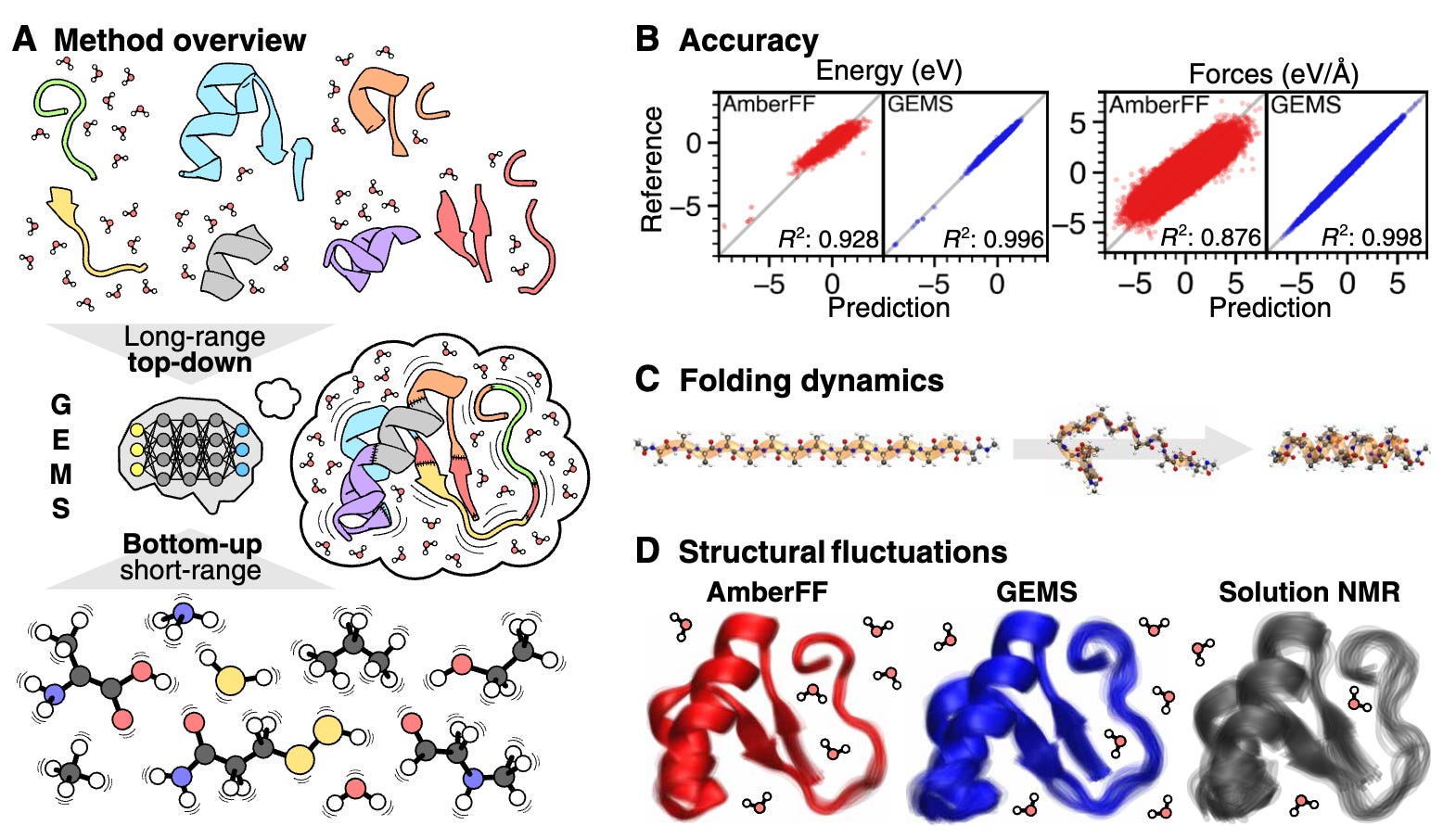

This post assumes some knowledge of molecular dynamics and forcefields/molecular mechanics. For readers unfamiliar with these topics, Abhishaike Mahajan has a great guide on his blog. Although forcefields are commonplace in all sorts of biomolecular simulation today, there’s a growing body of evidence showing that they often give unreliable results.