Last night we had our first event in the newly (re-)launched Charlottesville R Users (CRU) group. We had about 30 attendees, roughly half from academia and half from industry, plus a few from local government organizations.

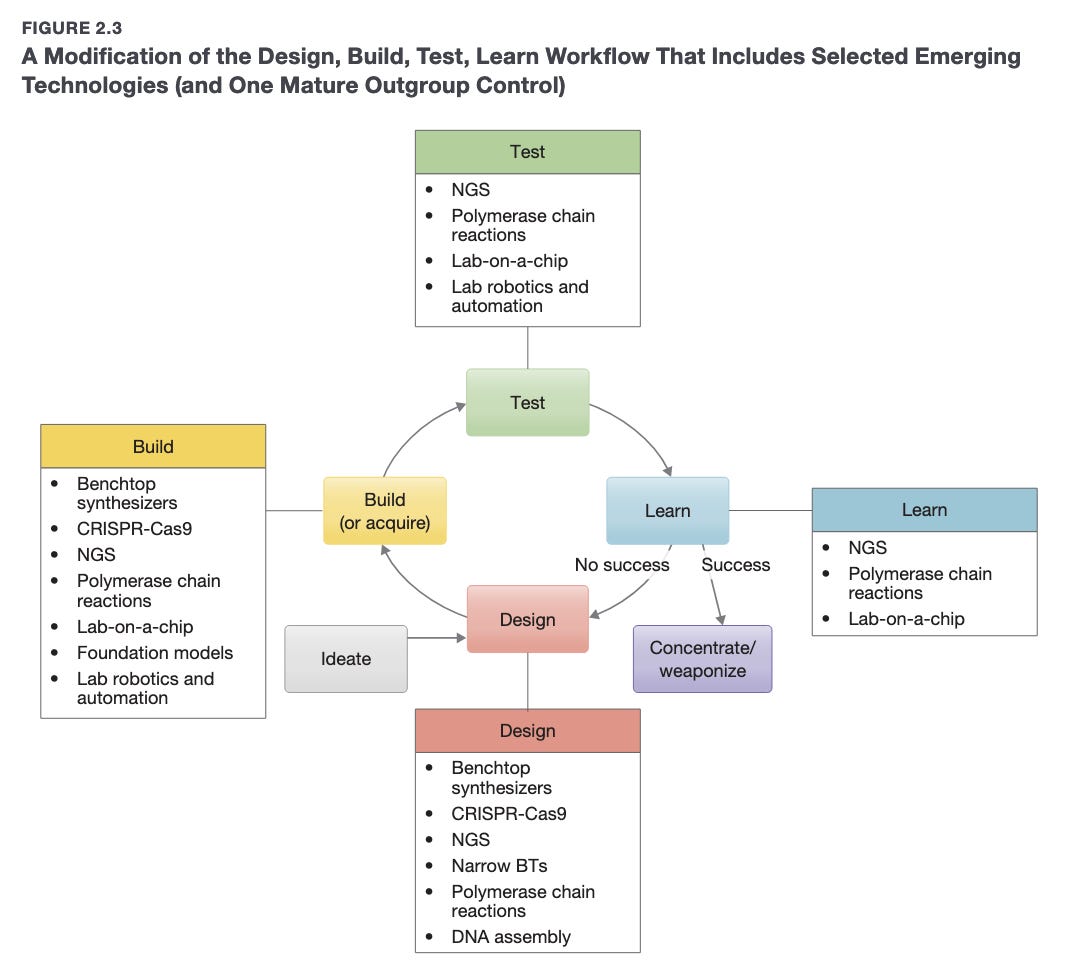

Happy Friday, colleagues. Lots going on this week. Once again I’m going through my long list of idle browser tabs trying to catch up where I can. ElevenReader (no affiliation) has been helpful to catch up this week! The Arc Institute reported the first ever viable genomes with genome language models.

This month’s debate: Should mirror life research be stopped? The idea of mirror life has captured imaginations and triggered warnings in equal measure.

Happy Friday, colleagues. Once again I’m getting ready for the weekend, going through my long list of idle browser tabs trying to catch up where I can.

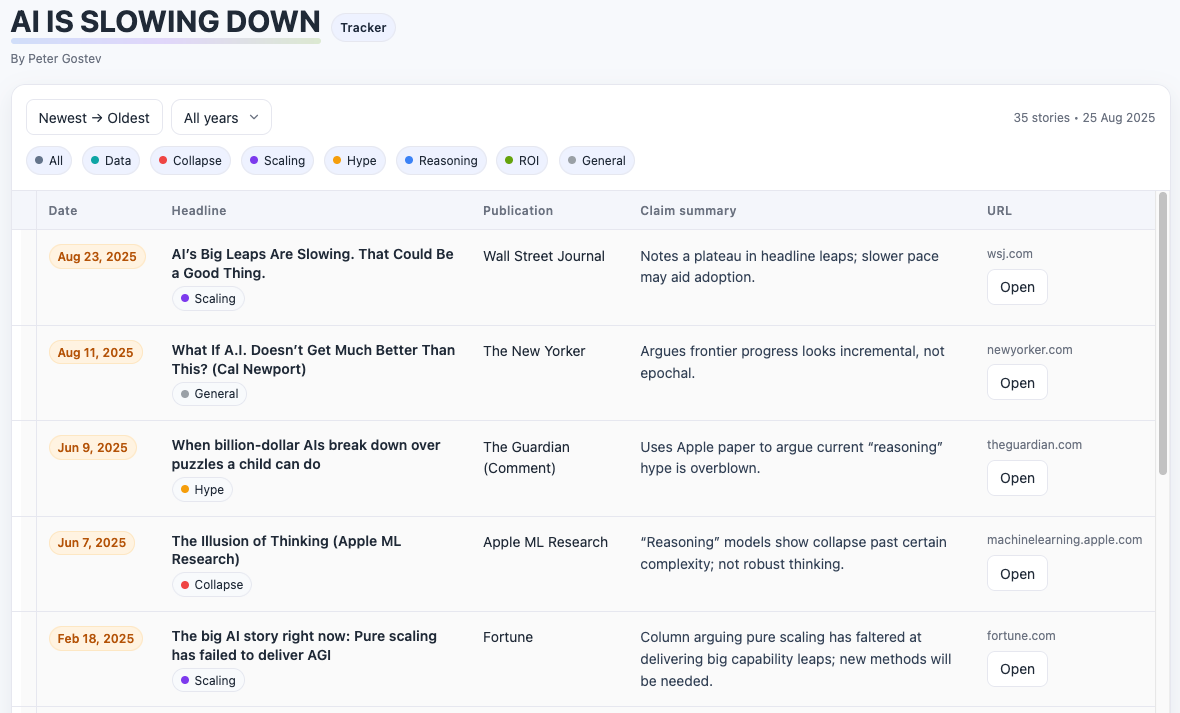

I recently came across this wonderful position paper by Olivia Guest and colleagues, where they pick apart the tech industry’s marketing, hype, & harm, arguing for safeguarding higher education, critical thinking, expertise, academic freedom, & scientific integrity. Guest, O., et al. Against the Uncritical Adoption of 'AI' Technologies in Academia. Zenodo, 5 Sept.

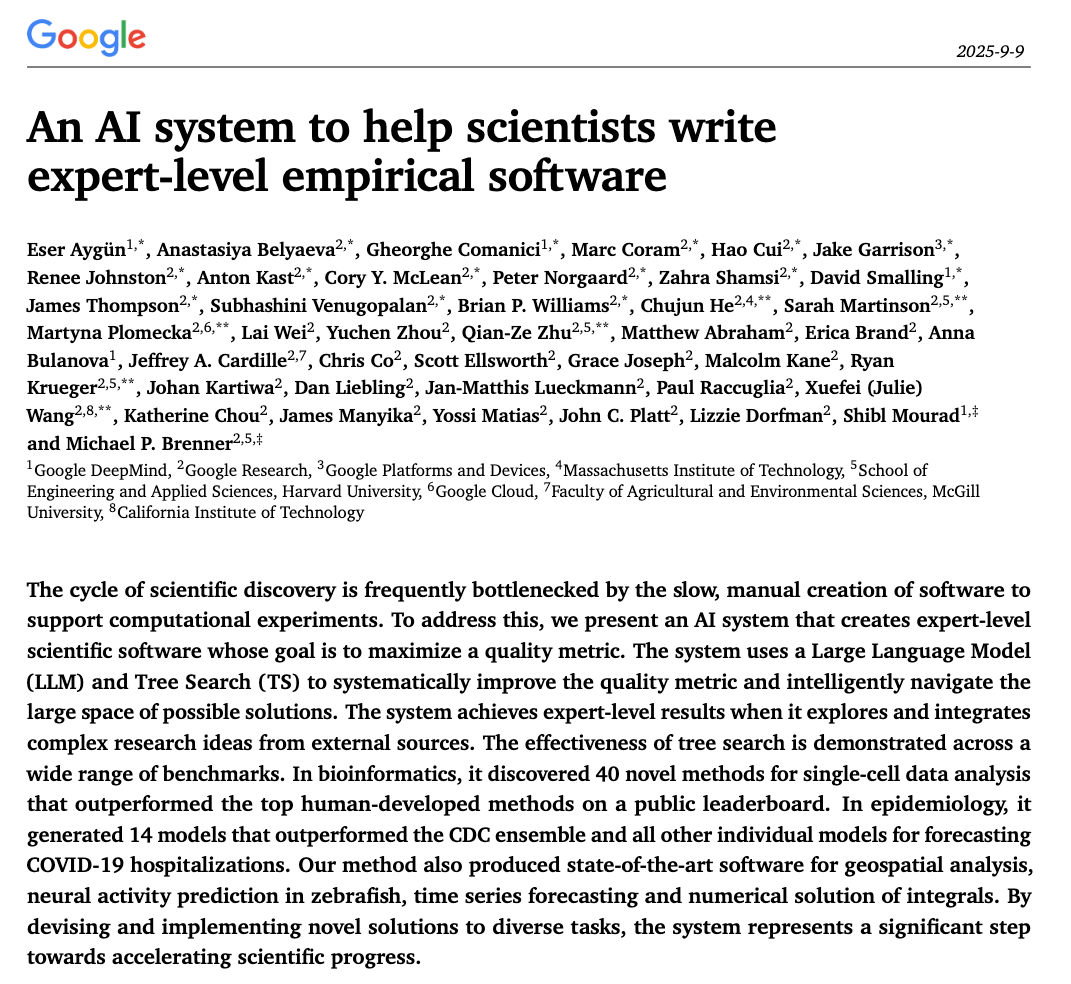

I’ve spent years writing bioinformatics tools (I’ve spent the last 6 years in industry and 90% of these tools I’ll never publish or open-source) and building infectious disease forecasting models (most of these are open-source, like FOCUS for COVID-19 [paper, code], FIPHDE for influenza [paper, code], PLANES for forecast plausibility analysis [paper, code, blog post]). Anyone who has

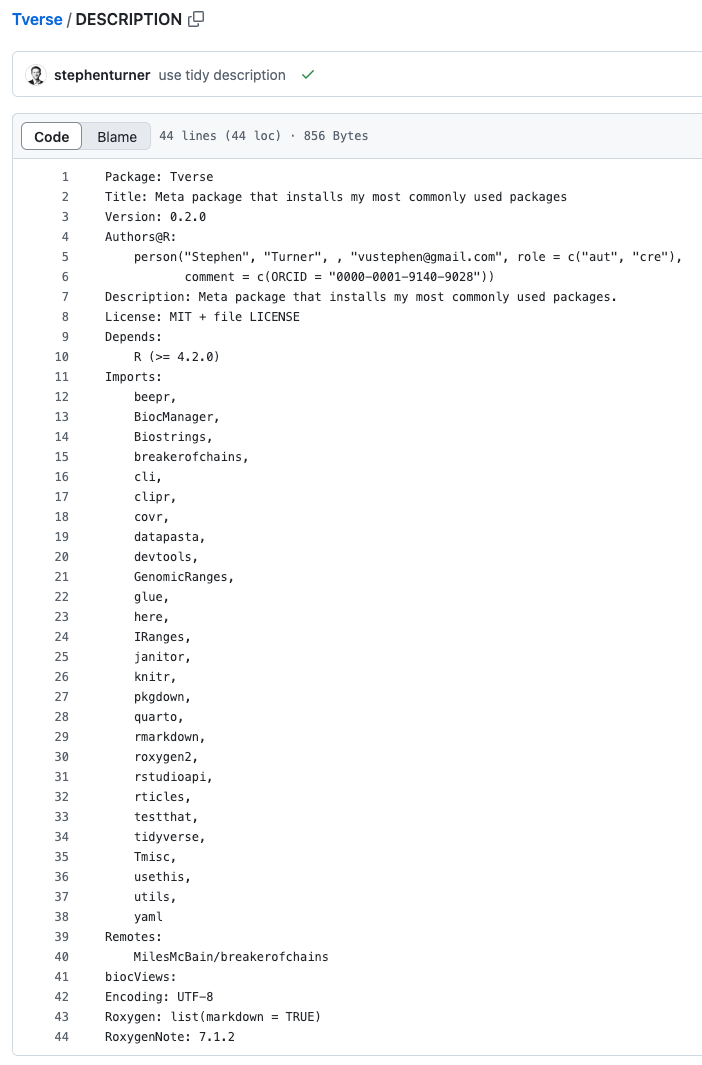

You upgrade your old Intel MacBook Pro for a new M4 MBP. You’re setting up a new cloud VM on AWS after migrating away from GCP. You get an account on your institution’s new HPC. You have everything just so in your development environment, and now you have to remember how to set everything up again. I just started a new position, and I’m doing this right now.

Happy Friday, colleagues. Somehow it’s September (I did not approve of this). Lots going on this week, and this is my regular attempt to close out the browser tabs I’ve accumulated over the past week with blog posts, podcasts, papers, etc. in AI, data science, genomics, public health, programming, scicomm, and other miscellany.

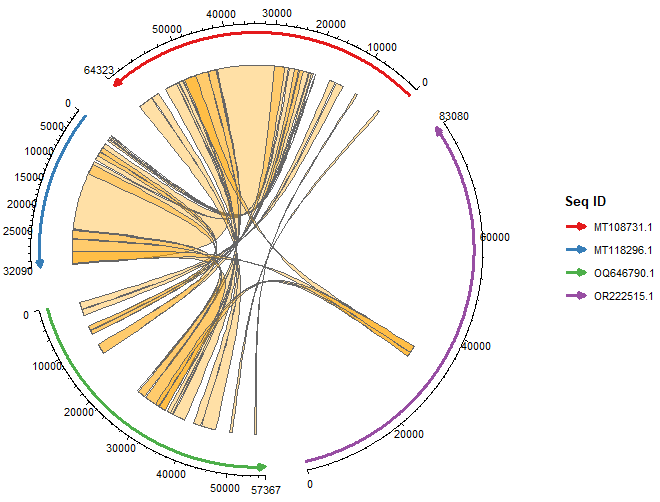

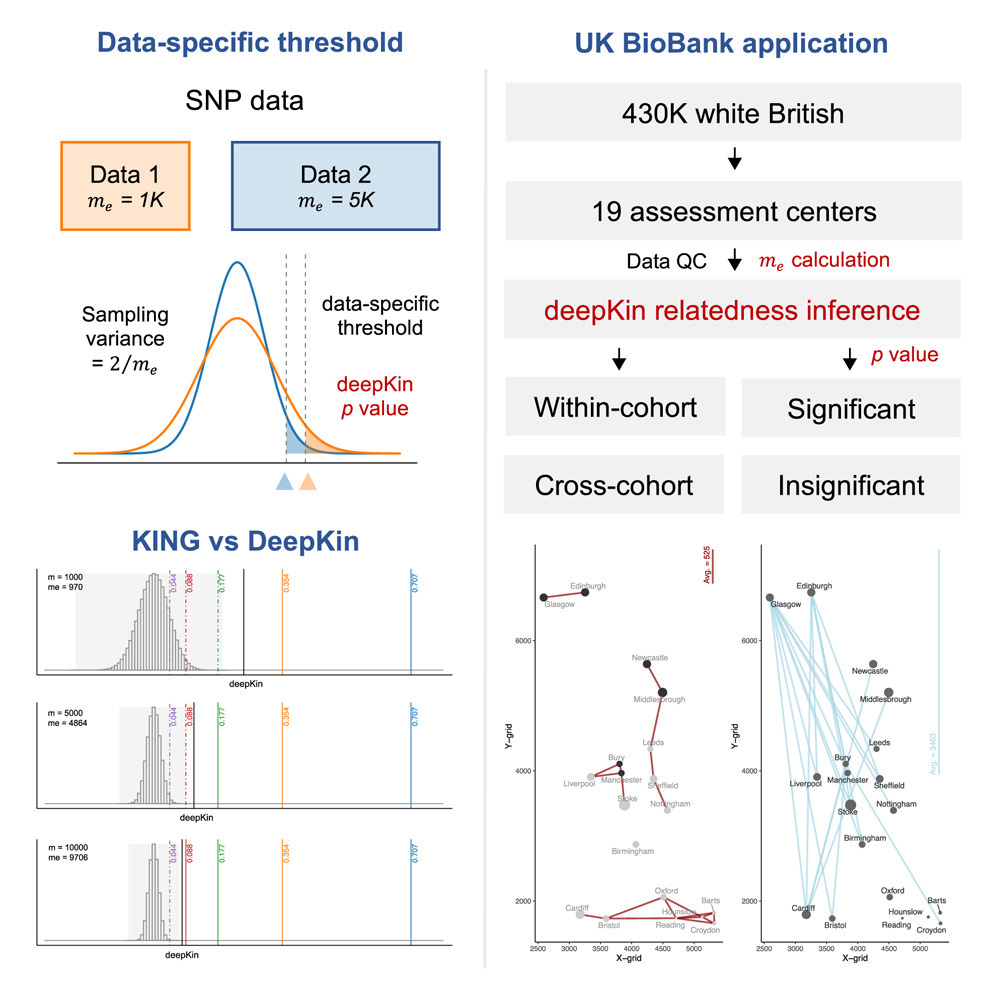

This week’s recap highlights biobank-scale relatedness estimation, SNP calling and haplotype phasing with long RNA-seq reads, predicting expression-altering promoter mutations with deep learning, and cross-species filtering for reducing alignment bias in comparative genomics studies.

Happy Friday, colleagues. August has flown by at warp speed. I started a new job, and my backlog of (semi-)pleasure reading grows longer. This is my regular attempt to close out the browser tabs I’ve accumulated over the past week with blog posts, podcasts, papers, etc. in AI, data science, genomics, public health, programming, scicomm, and other miscellany.

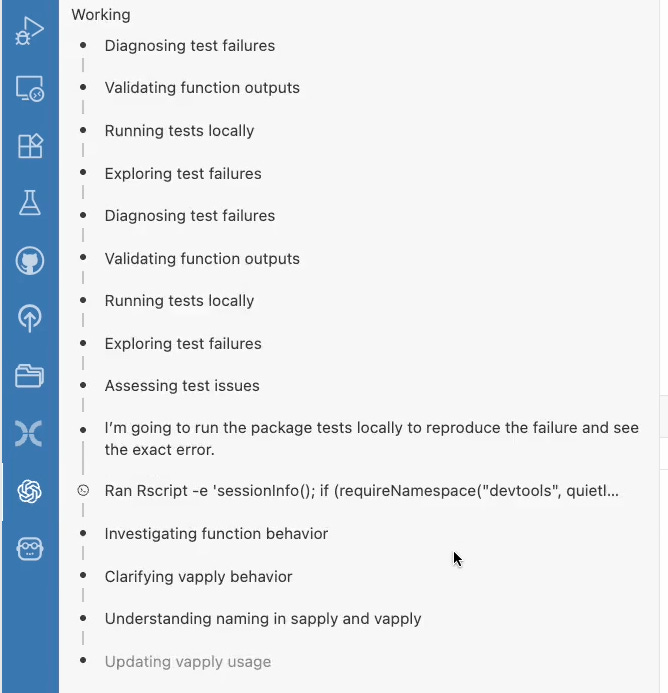

Last month I wrote about agentic coding in Positron using Positron Assistant, which uses the Claude API on the back end. Yesterday OpenAI announced a series of updates to Codex, the biggest being an IDE extension that lets you use Codex in VS Code, Cursor, Windsurf, etc. More details at developers.openai.com/codex.