Though my blog focuses on academic discovery and retrieval, these days you can’t really understand those topics without grappling with concepts like Transformer models, agents, and reasoning.

In the first part of this series, I covered EBSCOhost’s new Natural Language Search (NLS), which uses a Large Language Model (LLM) to expand a user's input into a Boolean search query that is then run over the conventional search system. In this article, I will focus on Web of Science’s Smart Search, first launched in April 2025. Like EBSCOhost’s offering, it is bundled with your product at no additional cost.
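To make the "expand into a Boolean query" step more concrete, here is a minimal Python sketch of that kind of transformation. It assumes the LLM has already returned one list of synonyms per concept in the user's question. The function names, the example question and the synonym lists are all hypothetical. EBSCOhost does not publish its prompt or output format, and the LLM call itself is stubbed out here.

```python
def quote_term(term: str) -> str:
    """Wrap multi-word phrases in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_boolean_query(concepts: list[list[str]]) -> str:
    """OR the synonyms within each concept, then AND the concept groups together.

    `concepts` is a hypothetical stand-in for LLM output: one list of
    synonyms per concept detected in the user's natural-language question.
    """
    groups = []
    for synonyms in concepts:
        terms = " OR ".join(quote_term(t) for t in synonyms)
        groups.append(f"({terms})")
    return " AND ".join(groups)


# Hypothetical expansion of: "does social media use affect teenagers' sleep?"
llm_concepts = [
    ["social media", "social networking", "Instagram", "TikTok"],
    ["adolescents", "teenagers", "youth"],
    ["sleep", "insomnia", "sleep quality"],
]
print(build_boolean_query(llm_concepts))
```

The output is an ordinary Boolean string, so it can be run over the existing keyword search system unchanged. Only the query-writing step involves the LLM.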

Warning: I am not a digital literacy specialist, and my understanding of such matters is limited; I blog about this for discussion and learning only. Since 2020, libraries have made a commendable push to arm undergraduates with the tools to navigate our chaotic information landscape.

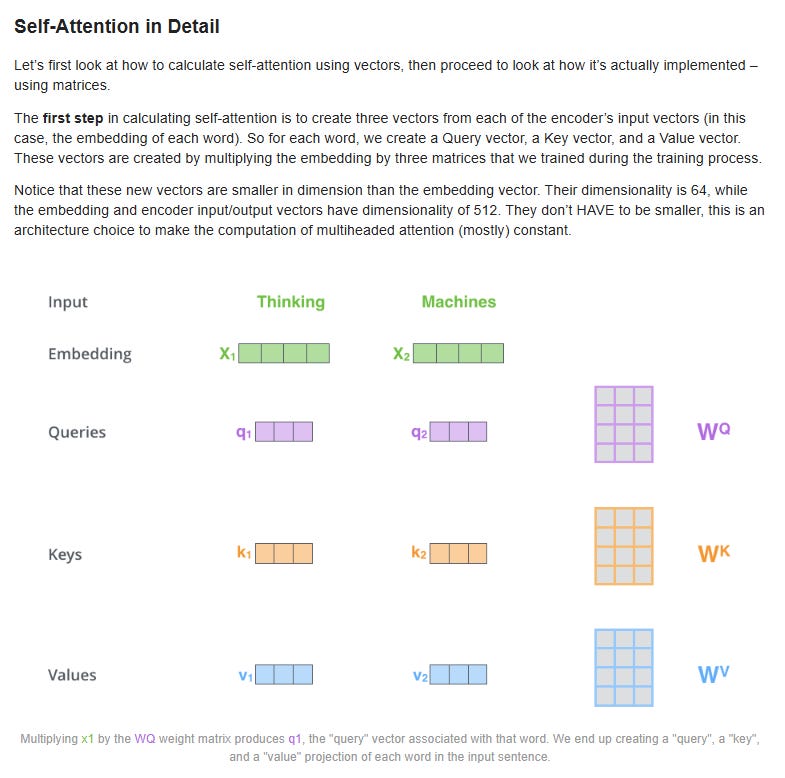

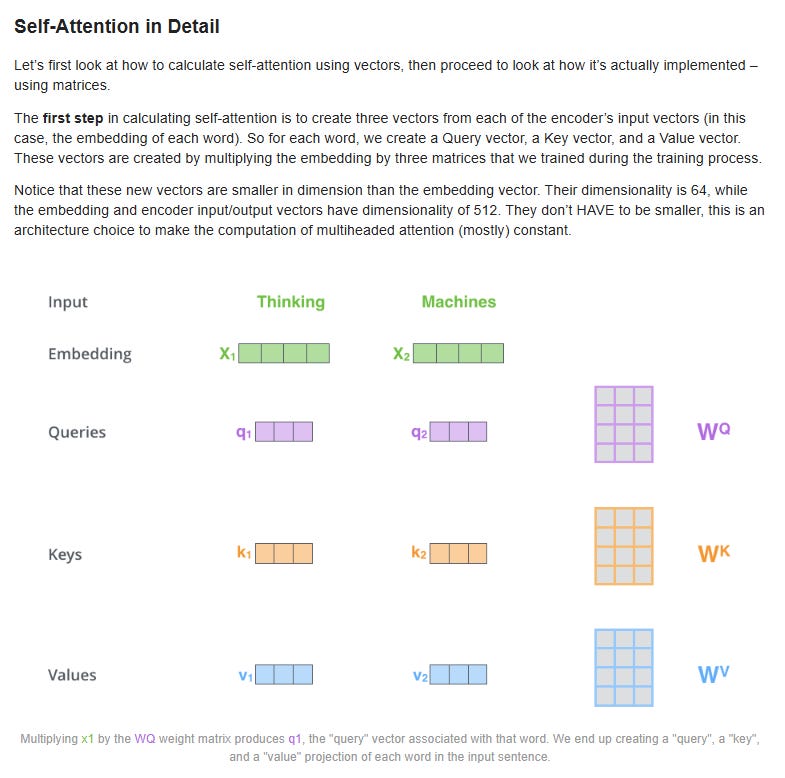

My blog focuses on the two primary ways that "AI", or more accurately transformer-based models, are impacting academic search. The first, and flashier, application is generating synthesized answers with citations from retrieved articles, a technique known as Retrieval Augmented Generation (RAG). The second, which is the focus of this post, is the rise of non-lexical (that is, non-keyword) search methods.
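For readers new to RAG, the retrieve-then-generate pattern can be sketched in a few lines of Python. This is a hypothetical toy. Retrieval here is plain word overlap, a stand-in for whatever ranker a real tool uses. The three-document corpus and the `doc-A`/`doc-B`/`doc-C` identifiers are invented. The final LLM call that would write the cited answer is omitted, so the sketch stops at assembling a prompt with numbered sources.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return the set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (a toy lexical stand-in)."""
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda doc_id: len(q & tokenize(corpus[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, corpus: dict[str, str], doc_ids: list[str]) -> str:
    """Number the retrieved sources so the model can cite them as [n]."""
    sources = "\n".join(
        f"[{i}] {doc_id}: {corpus[doc_id]}" for i, doc_id in enumerate(doc_ids, start=1)
    )
    return (
        "Answer the question using only the sources below, citing them as [n].\n"
        f"Sources:\n{sources}\n"
        f"Question: {query}"
    )


# Hypothetical mini-corpus; a real system would search a full index.
corpus = {
    "doc-A": "Screen time before bed is associated with poorer sleep in adolescents.",
    "doc-B": "Open access citation advantage in biology journals.",
    "doc-C": "Social media use and sleep quality among university students.",
}
query = "How does social media affect sleep?"
top = retrieve(query, corpus)
print(build_prompt(query, corpus, top))  # this prompt would then be sent to an LLM
```

Whatever the generator does afterwards, it can only cite what the retrieval step hands it. This is why the quality of retrieval matters so much for these tools.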

Tired of the usual library and scholarly communication conferences in the US or Europe? You're in luck! In 2026, the Asia-Pacific region will host at least two top-class conferences (formerly hosted in US/Europe) that I highly recommend.

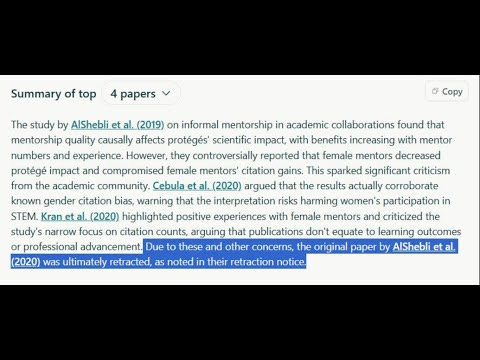

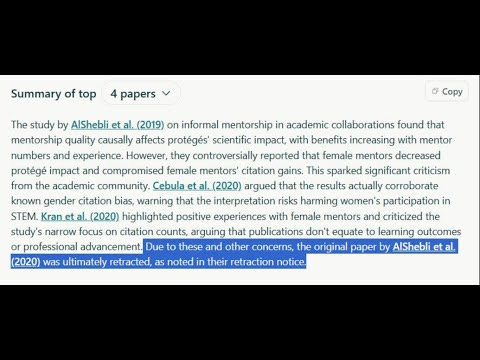

In my previous post, I tested eight academic RAG or RAG‑like tools (Elicit, scite assistant, SciSpace, Primo Research Assistant, Undermind, AI2 Scholar QA, and several "Deep Research" modes from OpenAI, Gemini, and Perplexity) to see how they handled a well‑publicised 2020 paper that has since been retracted: “The association between early‑career informal mentorship in academic collaborations and

Musings about Librarianship (Substack): “A Home Full of Memories—And a New Door Opening.” When I first hit “Publish” on Musings About Librarianship on Blogger back in 2009, I was a wet-behind-the-ears librarian nervously sharing half-baked thoughts. I never imagined that my tiny Blogger corner would grow into a gathering place for thousands of curious colleagues around the world.

Academic Retrieval Augmented Generation (RAG) systems live or die on the sources they retrieve, so what happens when they retrieve retracted papers? In this post, I will discuss how different academic RAG systems handle them, and I will end with some suggestions for vendors of such systems.

May was a busy month for me in terms of output. First, I had two pieces of work published in Katina Magazine that I am quite proud of. The first was a comparative review of Primo Research Assistant, Scopus AI, and Web of Science Research Assistant, written by yours truly.

As an academic librarian, I’m often asked: “Which AI search tool should I use?” With a flood of new tools powered by large language models (LLMs) entering the academic search space, answering that question is increasingly complex. Both startups and established vendors are rushing to offer “Deep Search” or “Deep Research” solutions, leading to a surge in requests to test these products.

Following my recent talk for the Boston Library Consortium, many of you expressed a strong interest in learning how to test the new generation of AI-powered academic search tools. Specifically, evaluating systems using Retrieval-Augmented Generation (RAG) was the top request, surpassing interest in learning more about semantic search or LLMs alone. This is a crucial topic, as these tools are rapidly entering our landscape.